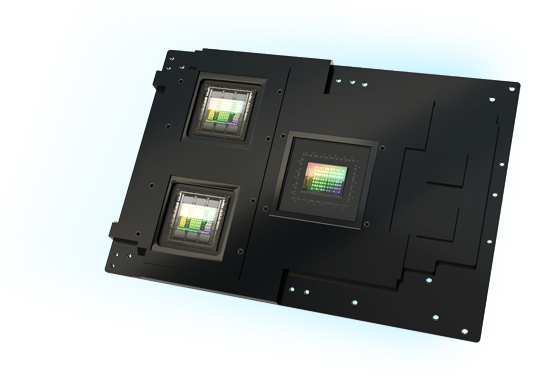

NVIDIA GB200 Grace Blackwell Superchip

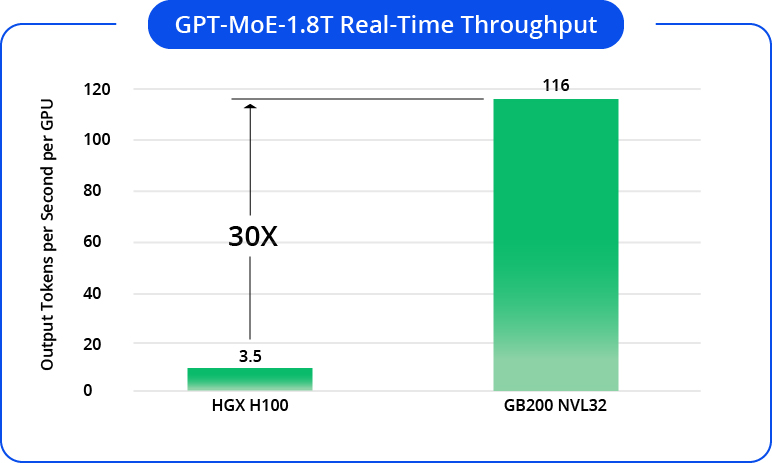

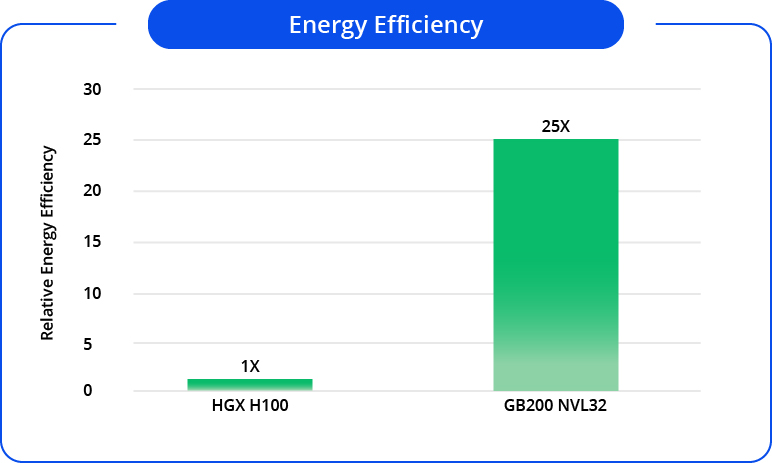

The NVIDIA GB200 Grace Blackwell Superchip arrives as the flagship of the Blackwell architecture, catapulting generative AI to trillion-parameter scale and delivering 30X faster real-time large language model (LLM) inference, 25X lower TCO, and 25X less energy. It combines two Blackwell GPUs and a Grace CPU and scales up to the GB200 NVL72, a 72-GPU NVIDIA® NVLink®-connected system that acts as a single massive GPU.

QCT NVIDIA GB200 Grace Blackwell Superchip & NVIDIA GB200 NVL72 Products (NVIDIA MGX™ Architecture)

The NVIDIA GB200 Grace Blackwell Superchip supercharges next-generation AI and accelerated computing. Acting as the heart of a much larger system, the NVIDIA GB200 Grace Blackwell Superchip can scale up to the NVIDIA GB200 NVL72. This is the first architecture with rack-level fifth-generation NVIDIA® NVLink®, connecting 72 high-performance NVIDIA B200 Tensor Core GPUs and 36 NVIDIA Grace™ CPUs to deliver 900 GB/s of bidirectional bandwidth.
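The 72-GPU and 36-CPU totals follow from the Superchip composition described above (two Blackwell GPUs and one Grace CPU each). A minimal Python sketch of that arithmetic is shown here; the names `Superchip`, `RackTotals`, and `rack_totals` are hypothetical and exist only for illustration, and the count of 36 Superchips is inferred from the one-CPU-per-Superchip ratio rather than stated directly.

```python
from typing import NamedTuple


class Superchip(NamedTuple):
    """Per-Superchip device counts, taken from the text above."""
    gpus: int = 2  # two Blackwell GPUs
    cpus: int = 1  # one Grace CPU


class RackTotals(NamedTuple):
    gpus: int
    cpus: int


def rack_totals(superchips: int, chip: Superchip = Superchip()) -> RackTotals:
    """Multiply per-Superchip counts by the number of Superchips in the rack."""
    return RackTotals(gpus=superchips * chip.gpus, cpus=superchips * chip.cpus)


# Assumption: 36 Superchips, inferred from 36 Grace CPUs at one CPU per Superchip.
totals = rack_totals(36)
print(f"GPUs: {totals.gpus}, CPUs: {totals.cpus}")
```

Under these assumptions the sketch reproduces the GB200 NVL72 figures of 72 GPUs and 36 CPUs.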

With NVIDIA® NVLink® Chip-to-Chip (C2C), applications have coherent access to a unified memory space to eliminate complexity and speed up deployment. This simplifies programming and supports the larger memory needs of trillion-parameter LLMs, transformer models for multimodal tasks, models for large-scale simulations, and generative models for 3D data.

Additionally, the NVIDIA GB200 NVL72 uses NVIDIA® NVLink® and cold-plate-based liquid cooling to create a single massive 72-GPU rack that can overcome thermal challenges, increase compute density, and facilitate high-bandwidth, low-latency GPU communication.

Performance Result

To accelerate performance for multitrillion-parameter and mixture-of-experts AI models, the latest iteration of NVIDIA NVLink® delivers groundbreaking 1.8TB/s bidirectional throughput per GPU, ensuring seamless high-speed communication among up to 576 GPUs for the most complex LLMs.

(Source: NVIDIA®)
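To put the per-GPU figure in context, the short Python sketch below sums the quoted 1.8 TB/s across an NVLink domain. It is a back-of-the-envelope calculation, not a measured result: it assumes every GPU contributes its full quoted bidirectional bandwidth, and the function name `aggregate_nvlink_gb_per_s` is hypothetical.

```python
# Quoted per-GPU NVLink throughput (bidirectional), kept in GB/s as an
# integer to avoid floating-point rounding in the multiplication.
NVLINK_GB_PER_S_PER_GPU = 1_800  # 1.8 TB/s


def aggregate_nvlink_gb_per_s(gpu_count: int) -> int:
    """Naive aggregate: per-GPU bandwidth multiplied by the number of GPUs."""
    if gpu_count < 0:
        raise ValueError("gpu_count must be non-negative")
    return gpu_count * NVLINK_GB_PER_S_PER_GPU


# 72 GPUs is one GB200 NVL72 rack; 576 is the maximum domain size quoted above.
for gpus in (72, 576):
    total_tb = aggregate_nvlink_gb_per_s(gpus) / 1_000
    print(f"{gpus} GPUs: {total_tb:.1f} TB/s aggregate")
```

For a single 72-GPU rack this simple sum comes to 129.6 TB/s; real workloads will see less, depending on traffic patterns and topology.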

Liquid-cooled GB200 NVL72 racks reduce a data center’s carbon footprint and energy consumption. Liquid cooling increases compute density, reduces the amount of floor space used, and facilitates high-bandwidth, low-latency GPU communication with large NVLink domain architectures. Compared to NVIDIA H100 air-cooled infrastructure, GB200 delivers 25X more performance at the same power while reducing water consumption.

(Source: NVIDIA®)

QCT Servers Powered by NVIDIA

QCT NVIDIA MGX™-based systems such as the QuantaGrid S74G-2U, QuantaEdge EGX77GE-2U and upcoming NVIDIA Grace™ Blackwell servers allow different configurations of GPUs, CPUs and DPUs, shortening the time frame for building future-compatible solutions. Based on the modular reference design, these configurations can not only support future accelerators, but also meet the requirements of diverse workloads, including those that incorporate liquid cooling, to shorten the development journey and reduce time to market.

- Accelerates Time to Market

- Multiple Form Factors to Offer Maximum Flexibility

- Runs Full NVIDIA Software Stack to Drive Acceleration Further

QCT NVIDIA GB200 Grace Blackwell Superchip

- New class of rack-scale architecture interconnecting 36 NVIDIA Grace™ CPUs and 72 Blackwell GPUs

- Supports up to 2x NVIDIA GB200 Grace Blackwell Superchip in a 2U form factor

- Cold plate-based liquid cooling design to meet the thermal challenge from high-powered GPUs

- Designed for up to 30X faster real-time inference on trillion-parameter LLMs

NVIDIA MGX™ Modular Architecture

- Powered by NVIDIA Grace™ Hopper Superchip

- NVIDIA® NVLink®-C2C high-bandwidth and low-latency interconnect

- Optimized for memory intensive inference and AI workloads

NVIDIA MGX™ Modular Architecture

QuantaEdge EGX77GE-2U

- Powered by the NVIDIA Grace™ Hopper Superchip

- NVIDIA® NVLink®-C2C high-bandwidth and low-latency interconnect

- Thermal enhancements for critical environments

- O-RAN compliant 5G vRAN system

- Multi-Access Edge Computing (MEC) server

NVIDIA MGX™ Modular Architecture

QuantaGrid D74S-1U

- New class of rack-scale architecture interconnecting 32 NVIDIA Grace Hopper™ Superchips via NVIDIA® NVLink®.

- Supports up to 2x 1000W TDP NVIDIA® GH200 144GB Grace Hopper™ Superchips in a 1U form factor.

- Cold plate-based liquid cooling design to meet the thermal challenge from high-powered GPUs.

- Designed to handle terabyte-class AI models.

NVIDIA MGX™ Modular Architecture

Delivering Higher Performance, Faster Memory, and Massive Bandwidth for Compute Efficiency

Bandwidth over PCIe Gen 5 for GPU-GPU interconnection with 900GB/s for NVIDIA® NVLink® Bridge

AI Training performance (NVIDIA HGX™ H100 compared to NVIDIA HGX™ A100)

Increased system bandwidth and reduced latency. Direct data path between GPU memory and storage through NVIDIA GPUDirect® Storage and GPUDirect® RDMA

- Supports NVIDIA HGX™ H200 8-GPU and H100 8-GPU

- Future-proof design for NVIDIA HGX™ Blackwell GPU

- Modular motherboard tray design for both Intel and AMD platforms

- Liquid cooling available for both Intel and AMD platforms

QuantaGrid D74A-7U

- 4th Gen AMD EPYC™ processors, up to 400W TDP, future compatible with next-gen AMD EPYC™ processors

- Supports NVIDIA HGX™ B200, HGX™ B100, and HGX™ H100

- System optimized for Generative AI, Large Language Models (LLMs) and HPC workloads

- Modularized system design for optimal flexibility and easy serviceability

- Liquid cooling available

NVIDIA HGX™ Architecture

- 5th/4th Gen Intel® Xeon® Scalable processors, up to 350W TDP

- Supports NVIDIA HGX™ B200, HGX™ B100, and HGX™ H100

- System optimized for Generative AI, Large Language Models (LLMs) and HPC workloads

- Modularized system design for optimal flexibility and easy serviceability

- Liquid cooling available

NVIDIA HGX™ Architecture

Bandwidth over PCIe Gen 5 for GPU-GPU interconnection with 600GB/s for NVIDIA® NVLink® Bridge.

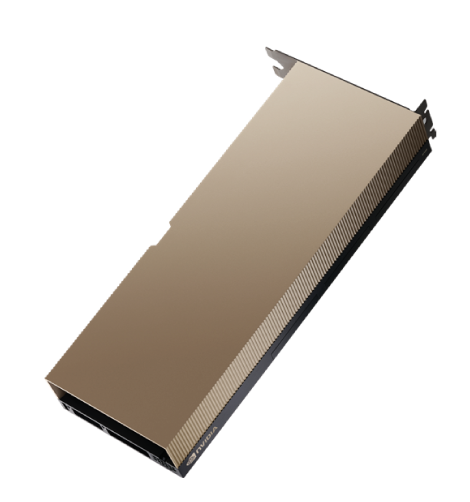

QCT has adopted a rich portfolio of NVIDIA® cutting-edge GPUs to accelerate some of the world’s most demanding workloads, including AI, HPC and data analytics, pushing the boundaries of innovation from cloud to edge.

Powered by NVIDIA® GPUs

- Powered by 5th/4th Gen Intel® Xeon® Scalable processors

- Powered by NVIDIA® GPUs

- PCIe 5.0 & DDR5 platform ready

- Up to 4x DW accelerators or 8x SW accelerators

- Supports active type and passive type accelerators

- Up to 10x PCIe 5.0 NVMe drives to speed up data-loading

- PCIe 5.0 400Gb networking for scale-out

- Enhanced serviceability with tool-less, hot-plug designs

Powered by NVIDIA® GPUs

- Powered by AMD EPYC™ 7003X series processors

- Powered by NVIDIA® GPUs

- Flexible acceleration card configuration optimized for both compute and graphic intensive workloads

- Up to 128 CPU cores with 8TB memory capacity to feed high throughput accelerator cards

- Up to 2x HDR/200GbE networking for cluster computing

Powered by NVIDIA® GPUs

- Powered by dual 5th/4th Gen Intel® Xeon® processors

- Powered by NVIDIA® GPUs

- PCIe 5.0 & DDR5 platform ready

- Up to 4x dual-width accelerators or 8x single-width accelerators

- Supports active type and passive type accelerators

- Up to 10x PCIe 5.0 NVMe SSDs to speed up data-loading

- PCIe 5.0 400Gb networking for scale-out

- Supports liquid cooling

Powered by NVIDIA® GPUs

- Powered by AMD EPYC™ 9004 series processors

- Designed with dual SP5 Genoa/Bergamo/Genoa-X processors

- Powered by NVIDIA® GPUs

- PCIe 5.0 & DDR5 platform ready

- High-density design that supports dual processors in a 1U system

- Up to 2x SW accelerators

- Supports up to 5x PCIe 5.0 expansion slots

Powered by NVIDIA® GPUs

- Powered by AMD EPYC™ 9004 series processors

- Designed with single SP5 Genoa/Bergamo/Genoa-X processors

- Powered by NVIDIA® GPUs

- PCIe 5.0 & DDR5 platform ready

- Supports up to 2x SW accelerators in a 1U system

- Supports up to 5x PCIe 5.0 expansion slots with single processor

Powered by NVIDIA® GPUs

- Powered by dual 5th/4th Gen Intel® Xeon® processors

- Powered by NVIDIA® GPUs

- PCIe 5.0 & DDR5 platform ready

- Offers 16x E1.S or 12x 2.5-inch NVMe flash drives

- Up to 3x SW accelerators in a 1U chassis

- Supports up to 400GbE networking bandwidth in x16 PCIe 5.0 slots

- Enhanced serviceability with tool-less, hot-swap designs

- NEBS-compliant for telco/5G data center deployments

- Supports liquid cooling

QCT and NVIDIA have worked together to push the boundaries of innovation with a variety of accelerated infrastructures powered by NVIDIA, enabling multiple use cases across different verticals.

In terms of smart manufacturing, QCT has integrated NVIDIA technologies such as NVIDIA CloudXR™, NVIDIA Omniverse™ and NVIDIA® CUDA® with QCT OmniPOD Enterprise 5G Solution to pre-validate virtual tour, production line simulation and object detection use cases.

Regarding HPC and AI infrastructures, QCT POD has leveraged NVIDIA GPUs, InfiniBand and CUDA to deliver better performance, allowing HPC and AI technologies to be run under one system architecture with a cloud-native scheduler and data tiering tools.

QCT has also developed QCT NVIDIA AI Enterprise Solution (NVAIE) that takes advantage of the NVIDIA AI Enterprise suite and a virtualization platform to help enterprises run AI applications.

Watch Videos

QCT NVIDIA MGX™ Systems

QCT Cutting-Edge Infrastructures and Solutions Powered by NVIDIA®

QCT QoolRack - Liquid to Air Cooling Solution