NVIDIA GB300 Grace™ Blackwell Ultra Superchip

The NVIDIA GB300 Grace™ Blackwell Ultra Superchip marks a new milestone in the AI landscape, combining groundbreaking advancements in networking with unmatched computational power and memory. With 288GB of HBM3e memory per GPU, a 1.5x increase in AI FLOPS over NVIDIA Blackwell, and integration with NVIDIA Quantum-X800 InfiniBand and Spectrum™-X Ethernet, it delivers breakthrough performance for AI reasoning, agentic AI, and video inference applications.

NVIDIA GB300 NVL72

The NVIDIA GB300 NVL72 brings enhanced compute and memory capabilities to the next generation of AI and accelerated computing with 72 interconnected GPUs acting as one gigantic GPU.

Additionally, the NVIDIA GB300 NVL72 uses NVIDIA NVLink™ and energy-efficient liquid cooling. These technologies help reduce carbon footprint and optimize energy usage while maximizing compute density, and NVLink's high-bandwidth, low-latency interconnect enhances GPU-to-GPU communication. With these features, QCT servers accelerated by NVIDIA Blackwell Ultra GPUs deliver exceptional performance for a wide range of AI applications.

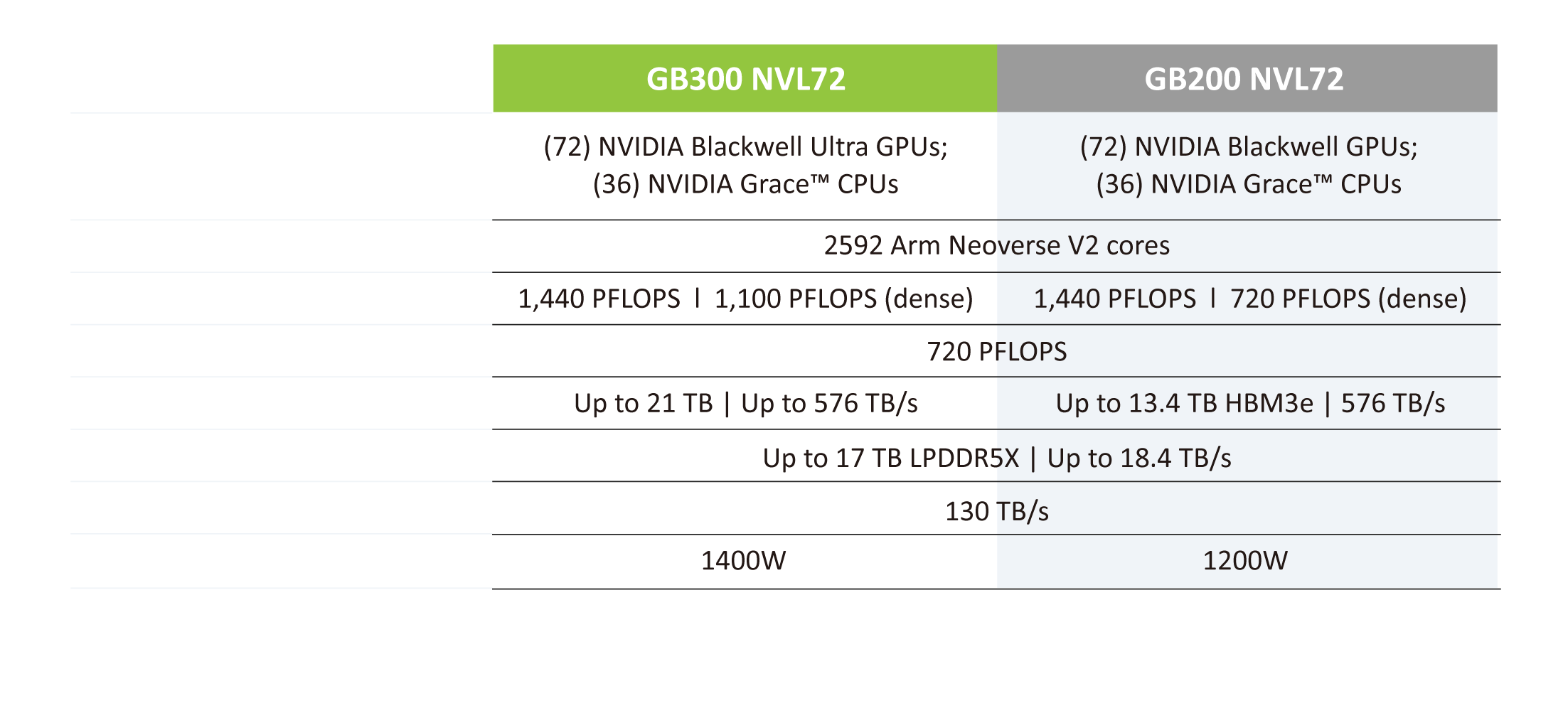

Gen-to-Gen Comparison

QCT Servers Accelerated by NVIDIA

Discover the power of QCT’s cutting-edge infrastructure accelerated by NVIDIA Blackwell Ultra GPUs, NVIDIA Grace Blackwell Superchips, and the latest NVIDIA data center PCIe GPUs. These QuantaGrid systems not only support future accelerators and liquid cooling to meet diverse workload needs, but also feature flexible, modular designs that speed up development and reduce time-to-market.

- Accelerates Time to Market

- Multiple Form Factors to Offer Maximum Flexibility

- Runs Full NVIDIA Software Stack to Drive Acceleration Further

The NVIDIA GB300 NVL72 brings enhanced compute and memory capabilities to the next generation of AI and accelerated computing with 72 interconnected NVIDIA Blackwell Ultra GPUs acting as one gigantic GPU.

This rack-level solution can be seamlessly integrated with existing NVIDIA GB200 NVL72 infrastructure, delivering higher performance with efficient liquid cooling. The design reduces energy use while maximizing compute density and optimizing floor space.

QuantaGrid D75U-1U

- Accelerated by NVIDIA GB300 Grace™ Blackwell Ultra Superchips

- 288GB of HBM3e memory capacity per GPU allows for larger batch sizes and maximum throughput

- NVIDIA ConnectX®-8 SuperNIC delivers 800 Gb/s connectivity per GPU

- ConnectX-8 (CX8) provides built-in PCIe switch functionality, removing the IPEX BD and cables for a simplified system design

- Unified GB200 and GB300 compute tray (Bianca), leveraging the NVIDIA MGX™ modular design

| Specification | Details |
|---|---|
| Platform | (2) NVIDIA GB300 Grace™ Blackwell Ultra Superchips |
| Processor | (1) NVIDIA Grace™ CPU per superchip |
| GPU | (2) NVIDIA Blackwell Ultra GPUs per superchip |
| Memory | CPU: up to 512GB (480GB) LPDDR5X per CPU; GPU: up to 288GB (279GB) HBM3e embedded per GPU |
| Storage | (4) E1.S 15mm PCIe SSDs, (8) slots available |
| Onboard Storage | (1) PCIe M.2 22110/2280 SSD |
| Networking | (1) NVIDIA® BlueField®-3 B3240 dual-port 400G DPU; (4) NVIDIA® ConnectX®-8 800Gb OSFP ports |
| Power | 48-54V DC bus bar |
| Dimensions | (W) 438 x (H) 43.6 x (D) 766mm |
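
To put the per-GPU figures above in rack-scale terms, the short Python sketch below multiplies them across a 72-GPU GB300 NVL72 rack. It is a back-of-the-envelope calculation, not a QCT sizing tool, and it assumes each compute tray carries two GB300 superchips with two Blackwell Ultra GPUs each (four GPUs per tray), 288GB nominal (279GB usable) HBM3e per GPU, and one 800 Gb/s ConnectX-8 port per GPU. The constant and function names are illustrative only.

```python
# Rough rack-level arithmetic for a GB300 NVL72 rack (nominal figures only).
GPUS_PER_RACK = 72
SUPERCHIPS_PER_TRAY = 2    # assumption: two GB300 superchips per compute tray
GPUS_PER_SUPERCHIP = 2     # assumption: one Grace CPU + two Blackwell Ultra GPUs
HBM_PER_GPU_GB = 288       # nominal capacity; ~279GB usable per the table above
NIC_GBPS_PER_GPU = 800     # ConnectX-8 SuperNIC line rate, Gb/s per GPU


def rack_summary(hbm_per_gpu_gb: int = HBM_PER_GPU_GB) -> dict:
    """Return tray count, total HBM3e (GB), and aggregate NIC line rate (Tb/s)."""
    gpus_per_tray = SUPERCHIPS_PER_TRAY * GPUS_PER_SUPERCHIP
    return {
        "compute_trays": GPUS_PER_RACK // gpus_per_tray,
        "total_hbm_gb": GPUS_PER_RACK * hbm_per_gpu_gb,
        "scale_out_tbps": GPUS_PER_RACK * NIC_GBPS_PER_GPU / 1000,
    }


if __name__ == "__main__":
    print(rack_summary())      # nominal 288GB per GPU
    print(rack_summary(279))   # usable 279GB per GPU
```

With the nominal figures this works out to 18 compute trays, 20,736GB (about 20.7TB) of HBM3e, and 57.6 Tb/s of aggregate ConnectX-8 line rate per rack; usable memory and achievable network throughput will be lower.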

The NVIDIA GB200 NVL72 is a powerhouse that connects 36 NVIDIA Grace™ CPUs and 72 Blackwell GPUs in a single NVIDIA NVLink™ domain, with the NVIDIA NVLink™-C2C interconnect linking each Grace CPU to its Blackwell GPUs. Functioning as a single, colossal GPU, this liquid-cooled, rack-scale system is designed to navigate the complexities of trillion-parameter AI models with unprecedented ease.

QuantaGrid D75B-1U

- Accelerated by dual NVIDIA GB200 Grace™ Blackwell Superchips

- CPU and GPU Connected by NVIDIA NVLink™

- Optimized serviceability with a front-access, tool-less, hot-pluggable design

- Liquid-cooled NVIDIA MGX architecture

| Specification | Details |
|---|---|
| Platform | (2) NVIDIA GB200 Grace™ Blackwell Superchips |
| Processor | (2) NVIDIA Grace™ CPUs |
| GPU | (4) NVIDIA Blackwell GPUs |
| Memory | CPU: up to 480GB LPDDR5X per CPU; GPU: up to 186GB HBM3e per GPU |
| Storage | (8) hot-swappable E1.S 15mm PCIe SSDs |
| Onboard Storage | (1) PCIe M.2 22110/2280 SSD |
| Networking | (2) NVIDIA® BlueField®-3 B3240 dual-port 400G DPUs; (4) NVIDIA ConnectX®-7 400Gb OSFP ports |
| Cooling | CPU/GPU: liquid cooling cold plate; Peripheral: (8) 4056 dual-rotor fans |
| Power | 48-54V DC bus bar clip |
| Dimensions | (W) 438 x (H) 43.6 x (D) 766mm |
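
For a rough gen-to-gen comparison, the sketch below applies the same multiplication to the GB200 NVL72 (36 Grace CPUs and 72 Blackwell GPUs at up to 186GB HBM3e each, as listed for the D75B-1U) and to the GB300 NVL72 figure of 288GB per GPU. Only nominal memory capacity is compared; bandwidth, FLOPS, and usable capacity are out of scope, and the dictionary and function names are hypothetical.

```python
# Gen-to-gen HBM3e capacity comparison for 72-GPU NVL72 racks (nominal figures).
GPUS_PER_RACK = 72
CPUS_PER_RACK = 36
HBM_GB = {"GB200": 186, "GB300": 288}  # per-GPU HBM3e capacity, nominal


def rack_hbm_gb(platform: str) -> int:
    """Total HBM3e across all GPUs in one NVL72 rack."""
    return GPUS_PER_RACK * HBM_GB[platform]


gpus_per_cpu = GPUS_PER_RACK // CPUS_PER_RACK          # GPUs per Grace CPU
ratio = rack_hbm_gb("GB300") / rack_hbm_gb("GB200")    # capacity ratio

print(f"GPUs per Grace CPU: {gpus_per_cpu}")
print(f"GB200 NVL72: {rack_hbm_gb('GB200'):,} GB")
print(f"GB300 NVL72: {rack_hbm_gb('GB300'):,} GB")
print(f"Capacity ratio: {ratio:.2f}x")
```

This gives two GPUs per Grace CPU and 13,392GB of HBM3e for a GB200 NVL72 versus 20,736GB for a GB300 NVL72, roughly 1.55x more memory per rack.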

QuantaGrid D75B-2U

- Accelerated by dual NVIDIA GB200 Grace™ Blackwell Superchips

- CPU and GPU Connected by NVIDIA NVLink™

- Optimized serviceability with a front-access, tool-less, hot-pluggable design

- Liquid-cooled NVIDIA MGX architecture

| Specification | Details |
|---|---|
| Platform | (2) NVIDIA GB200 Grace™ Blackwell Superchips |
| Processor | (2) NVIDIA Grace™ CPUs |
| GPU | (4) NVIDIA Blackwell GPUs |
| Memory | CPU: up to 480GB LPDDR5X embedded per CPU; GPU: up to 186GB HBM3e embedded per GPU |
| Storage | (8) E1.S 15mm PCIe SSDs |
| Onboard Storage | (1) PCIe M.2 22110/2280 SSD |
| Networking | (2) NVIDIA® BlueField®-3 B3240 dual-port 400G DPUs; (4) NVIDIA ConnectX®-7 400Gb OSFP ports |
| Cooling | CPU/GPU: liquid cooling cold plate; Peripheral: (6) 6056 dual-rotor fans |
| Power | 48-54V DC bus bar clip |
| Dimensions | (W) 438 x (H) 87 x (D) 766mm |

The QuantaGrid D75E-4U is more than just an x86-based system built on the Intel® Xeon® platform. It adheres to the NVIDIA MGX™ architecture, offering a modular design that supports diverse AI applications and customer demands. This system is compatible with a full range of NVIDIA data center PCIe GPUs, including NVIDIA RTX PRO™ 6000 Blackwell Server Edition, NVIDIA H200 NVL, NVIDIA H100 NVL, NVIDIA L40S GPU, NVIDIA L4 GPU, NVIDIA A10 GPU, and NVIDIA A16 GPU, enabling unparalleled flexibility and performance.

The NVIDIA H200 NVL is particularly suited for organizations with data centers seeking low-power, air-cooled enterprise rack designs. It delivers versatile acceleration for AI and HPC workloads of all sizes, making it an ideal choice for enterprises prioritizing efficiency and scalability.

With the QuantaGrid D75E-4U, customers can maximize computing power in compact spaces. The system supports flexible GPU configurations (1, 2, 4, or 8 GPUs), allowing companies to optimize their existing rack infrastructure and tailor performance to their specific requirements.

QuantaGrid D75E-4U

- Supports next-gen NVIDIA PCIe GPUs: up to 8x double-width (DW), air-cooled 600W GPUs

- All PCIe 5.0 expansion slots are designed to support up to 150W

- Remote heatsink solution for improved thermal performance

- Enhanced serviceability with tool-less, hot-pluggable designs

- Offers the flexibility to support a wide range of AI/HPC workloads

| Specification | Details |
|---|---|
| Processor | (2) Intel® Xeon® 6 processors, up to 350W TDP |
| Networking | (1) Dedicated 1GbE management port |
| Accelerator | Air cooling: NVIDIA RTX PRO™ 6000 Blackwell Server Edition, NVIDIA H200 NVL, NVIDIA H100 NVL, NVIDIA L40S GPU, NVIDIA L4 GPU, NVIDIA A10 GPU, NVIDIA A16 GPU |
| Memory | (32) DDR5 RDIMM up to 6,400 MHz; (16) MRDIMM up to 8,000 MHz |
| Storage | Air cooling, (4) DW GPUs: (12) hot-swappable E1.S SSDs; Air cooling, (8) DW GPUs: (24) hot-swappable E1.S SSDs |
| Expansion Slot | Air cooling, (4) DW GPUs: (12) hot-swappable E1.S SSDs; Air cooling, (8) DW GPUs: (24) hot-swappable E1.S SSDs |
| Cooling | Air cooling (design reserved for liquid cooling) |
| Power | 3+1 2700W/3200W CRPS Titanium PSUs |
| Dimensions | (W) 438 x (H) 176 x (D) 800mm |
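
A quick way to sanity-check the power figures is to add up the component TDPs and compare them with the redundant PSU capacity. The sketch below is a hypothetical back-of-the-envelope calculation, not a QCT sizing tool: it counts only GPU and CPU TDPs for a fully populated 8-GPU configuration and assumes the 3+1 arrangement leaves three PSUs carrying the load.

```python
# Rough power-budget check for a fully populated D75E-4U (8 DW GPUs).
# Assumptions: TDP figures only; fans, memory, drives, NICs and PSU
# conversion losses are ignored, and 3+1 redundancy leaves 3 PSUs active.

GPU_COUNT, GPU_TDP_W = 8, 600   # up to 8x DW 600W PCIe GPUs
CPU_COUNT, CPU_TDP_W = 2, 350   # 2x Xeon 6, up to 350W TDP each
ACTIVE_PSUS = 3                 # one of the four PSUs is redundant


def budget(psu_watts: int) -> tuple[int, int, int]:
    """Return (GPU+CPU TDP, usable PSU capacity, remaining headroom) in watts."""
    load = GPU_COUNT * GPU_TDP_W + CPU_COUNT * CPU_TDP_W
    capacity = ACTIVE_PSUS * psu_watts
    return load, capacity, capacity - load


for psu in (2700, 3200):
    load, capacity, headroom = budget(psu)
    print(f"{psu}W PSUs: load {load} W, capacity {capacity} W, headroom {headroom} W")
```

Under these assumptions the CPU and GPU TDPs total 5,500W, leaving roughly 2,600W of headroom with 2700W PSUs and 4,100W with 3200W PSUs for the remaining components and conversion losses.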

QCT systems based on the NVIDIA MGX™ architecture, such as the QuantaGrid S74G-2U, QuantaEdge EGX77GE-2U and new NVIDIA Grace™ Blackwell servers, allow different configurations of GPUs, CPUs and DPUs, shortening the time needed to build future-compatible solutions. Based on the modular reference design, these configurations can not only support future accelerators, but also meet the requirements of diverse workloads, including those that incorporate liquid cooling, to shorten the development journey and reduce time to market.

QuantaGrid S74G-2U

- Accelerated by the NVIDIA Grace Hopper™ Superchip

- First-gen NVIDIA MGX™ architecture with a modular design

- Optimized for memory-intensive inference and HPC workloads

| Specification | Details |
|---|---|
| Processor | NVIDIA GH200 Grace Hopper™ Superchip, 1000W TDP |
| Memory | CPU: up to 480GB LPDDR5X embedded; GPU: 144GB HBM3e; coherent memory between CPU and GPU via the NVIDIA NVLink™-C2C interconnect at 900GB/s |
| Storage | (4) hot-swappable E1.S NVMe SSDs |
| Networking | (1) Dedicated 1GbE management port |
| Expansion Slot | (3) FHFL DW PCIe 5.0 x16 slots |
| Dimensions | (W) 438 x (H) 87.5 x (D) 900mm |
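
The memory figures above can be combined into a quick estimate. The sketch below adds the CPU and GPU capacities to get the coherent memory visible to a GH200 superchip, then estimates an idealized one-way copy time. It assumes the quoted 900GB/s NVLink-C2C figure is total bidirectional bandwidth (so roughly 450GB/s per direction) and that the peak rate is sustained; both are simplifications.

```python
# Back-of-the-envelope memory math for one GH200 Grace Hopper Superchip.
CPU_LPDDR5X_GB = 480          # "up to" CPU memory from the table above
GPU_HBM3E_GB = 144            # GPU HBM3e capacity
NVLINK_C2C_TOTAL_GB_S = 900   # GB/s, assumed to be the total of both directions

# CPU and GPU memory are coherent over NVLink-C2C, so both pools are addressable.
total_gb = CPU_LPDDR5X_GB + GPU_HBM3E_GB

# Assumption: each direction gets half of the total bandwidth.
per_direction_gb_s = NVLINK_C2C_TOTAL_GB_S / 2

# Idealized time to move the full HBM3e contents to CPU memory once.
seconds = GPU_HBM3E_GB / per_direction_gb_s

print(f"Coherent memory: {total_gb} GB")
print(f"Idealized one-way HBM3e copy: {seconds * 1000:.0f} ms")
```

This yields 624GB of coherent memory and roughly 320ms for an idealized full copy of the 144GB of HBM3e; real transfers will take longer.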

QuantaEdge EGX77GE-2U

- First QCT edge server to be powered by the NVIDIA Grace Hopper™ Superchip

- Modular infrastructure based on the NVIDIA MGX™ architecture

- NVIDIA NVLink™-C2C high-bandwidth low-latency interconnect

- 400mm ultra-short-depth server design

| Specification | Details |
|---|---|
| Processor | NVIDIA GH200 Grace Hopper™ Superchip, 1000W TDP |
| Storage | Internal: (2) SATA/NVMe M.2 22110/2280 SSDs; External: (2) E1.S SSDs |
| Expansion Slot | (3) FHFL PCIe 5.0 x16 slots |
| Dimensions | (W) 447.8 x (H) 86.8 x (D) 400mm |

- Elevates generative AI and HPC workloads to new heights

- Future-proof drop-in compatibility for existing HGX architectures

- Modular motherboard compute tray design supporting both Intel and AMD platforms

- Liquid cooling options available for both Intel and AMD platforms

QuantaGrid D75H-10U

- Supports NVIDIA HGX™ B300, accelerated by NVIDIA Blackwell Ultra GPUs

- Features PCIe Gen 6 to enable 800G east-west data transfer, utilizing NVIDIA ConnectX®-8 SuperNICs™ to build massive-scale GPU clusters

- Supports NVIDIA® BlueField®-3 DPUs for north-south data transfer, minimizing bottlenecks and empowering the most complex AI-HPC workloads

| Specification | Details |
|---|---|
| Processor | (2) Intel® Xeon® 6 processors, up to 350W |
| Networking | (1) Dedicated LAN port (RJ45) for BMC management; (1) 1GbE LAN port (RJ45); (8) OSFP ports serving (8) single-port NVIDIA ConnectX-8 SuperNICs™ |
| Accelerator | (8) NVIDIA Blackwell Ultra GPUs |
| Storage | (8) hot-swappable E1.S SSDs; (2) PCIe M.2 2280 SSDs |
| Expansion Slot | (4) FHHL SW or (2) FHHL DW PCIe 5.0 x16 slots |
| Dimensions | (W) 447 x (H) 441.75 x (D) 800mm |
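
The networking row implies a simple per-node bandwidth figure. The sketch below multiplies the eight single-port ConnectX-8 SuperNICs by an assumed 800 Gb/s line rate each (the "800G" quoted in the feature list) and converts bits to bytes; protocol overhead is ignored, so real throughput will be lower. The function name is illustrative.

```python
# Aggregate east-west line rate for an 8-GPU HGX B300 node such as the D75H-10U.
def node_bandwidth(nics: int = 8, port_gbps: int = 800) -> tuple[int, float]:
    """Return (total Gb/s, total GB/s) at line rate, ignoring protocol overhead."""
    total_gbps = nics * port_gbps
    return total_gbps, total_gbps / 8  # 8 bits per byte


gbps, gbytes = node_bandwidth()
print(f"{gbps} Gb/s (about {gbytes:.0f} GB/s) per node")
```

For the D75H-10U this gives 6,400 Gb/s, or about 800 GB/s, of east-west line rate per node.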

QuantaGrid D75L-3U

- Leverages the established NVIDIA MGX™ architecture and liquid-cooling designs, ensuring a reliable, stable supply of racks, power, liquid cooling, and other key components

- Scales to ultra-high-density, rack-level GPU clusters in a compact 3U form factor; chassis compatible with the NVIDIA MGX™ rack

- Supports NVIDIA HGX™ B300, accelerated by NVIDIA Blackwell Ultra GPUs

- Features PCIe Gen 6 to enable 800G east-west data transfer to build massive-scale GPU clusters

| Specification | Details |
|---|---|
| Processor | (2) Intel® Xeon® 6 processors, up to 350W |
| Networking | (1) Dedicated LAN port (RJ45) for BMC management; (1) 1GbE LAN port (RJ45); (8) OSFP ports serving (8) single-port NVIDIA ConnectX®-8 VPI |
| Accelerator | (8) NVIDIA B300 SXM6 GPUs |
| Storage | (8) hot-swappable E1.S SSDs; (2) PCIe M.2 22110 SSDs |
| Expansion Slot | (3) FHHL SW PCIe 5.0 x16 slots |
| Dimensions | (W) 438 x (H) 131.7 x (D) 864.5mm |
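
To illustrate the density claim for the D75L-3U, the sketch below compares GPUs per rack unit with the D75H-10U, both of which carry eight Blackwell Ultra GPUs. The 42U of usable rack space is a hypothetical figure, and the count considers height only; power delivery, cooling capacity, and switch space are ignored, so real rack populations will be lower.

```python
# GPU density per rack unit: D75L-3U vs D75H-10U (both 8x Blackwell Ultra GPUs).
systems = {"D75L-3U": (8, 3), "D75H-10U": (8, 10)}  # (GPUs, rack units)
USABLE_U = 42  # hypothetical usable rack height


def gpus_per_u(gpus: int, units: int) -> float:
    """GPUs per rack unit for one system."""
    return gpus / units


def gpus_in_rack(units_per_system: int, gpus_per_system: int, usable_u: int) -> int:
    """GPUs that fit by height alone; power, cooling and switch space are ignored."""
    return (usable_u // units_per_system) * gpus_per_system


for name, (gpus, units) in systems.items():
    print(f"{name}: {gpus_per_u(gpus, units):.2f} GPUs/U, "
          f"{gpus_in_rack(units, gpus, USABLE_U)} GPUs in {USABLE_U}U (height only)")
```

By height alone, 14 D75L-3U systems (112 GPUs) fit in 42U, against 4 D75H-10U systems (32 GPUs).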

QuantaGrid D75M-5U

- Accelerated by the NVIDIA HGX™ H200 8-GPU baseboard

- Powered by 2x AMD EPYC™ 9004/9005 Series processors

- Liquid cooled by design

- Modularized design for easy serviceability

- Multi-GPU server for HPC/AI training

| Specification | Details |
|---|---|
| Processor | (2) AMD EPYC™ 9004/9005 Series processors, up to 500W |
| Accelerator | (8) NVIDIA H200 SXM GPUs, 700W TDP |
| Networking | (1) Dedicated 1GbE management port |
| Storage | (18) hot-swappable 2.5" NVMe SSDs |
| Expansion Slot | (2) FHHL DW PCIe 5.0 x16 slots; (8) HHHL SW PCIe 5.0 x16 slots |
| Dimensions | (W) 447.8 x (H) 219.5 x (D) 950mm |

QuantaGrid D74F-7U

- Accelerated by the NVIDIA HGX™ H200 8-GPU baseboard

- Modularized system design for easy serviceability

- Supports NVIDIA DPUs for north-south (N-S) data transfer, minimizing bottlenecks and empowering the most complex AI-HPC workloads

| Specification | Details |
|---|---|
| Processor | (2) 5th/4th Gen Intel® Xeon® Scalable processors, up to 350W |
| Accelerator | (1) NVIDIA HGX™ H200 8-GPU baseboard, 700W TDP |
| Networking | (1) Dedicated 1GbE management port |
| Storage | Front: (18) hot-swap 2.5" PCIe 5.0 NVMe SSDs |
| Expansion Slot | (1) FHHL SW PCIe 5.0 x16 slot; (1) OCP NIC 3.0 SFF PCIe 5.0 x16 slot; (10) OCP NIC 3.0 TSFF PCIe 5.0 x16 slots |
| Dimensions | (W) 447.8 x (H) 307.85 x (D) 886mm |

QuantaGrid D75F-7U & D74F-7U & D74H-7U

- Supports the NVIDIA HGX™ H200

- Supports flexible expansion options, including OCP or PCIe form factor for north-south & east-west data transfer

- Modularized system design for optimal flexibility and easy serviceability

- Supports NVIDIA® BlueField®-3 DPUs and SuperNICs to minimize bottlenecks and boost performance for the most complex AI-HPC workloads

- System optimized for generative AI, LLM, and HPC workloads

| Specification | Details |
|---|---|
| Processor | (2) 5th/4th Gen Intel® Xeon® Scalable processors, up to 350W TDP |
| Networking | (1) Dedicated 1GbE management port |
| Accelerator | (8) NVIDIA H200 SXM5 GPUs |
| Storage | (18) hot-swappable 2.5" NVMe SSDs |
| Expansion Slot | [D75F-7U]: (2) FHHL DW PCIe 5.0 x16 slots + (8) HHHL SW PCIe 5.0 x16 slots; [D74F-7U]: (1) FHHL SW PCIe 5.0 x16 slot + (1) OCP 3.0 SFF slot + (10) OCP NIC 3.0 TSFF slots; [D74H-7U]: (2) OCP 3.0 SFF slots + (10) OCP NIC 3.0 TSFF slots |
| Dimensions | [D75F-7U]: (W) 447.8 x (H) 307.85 x (D) 950mm; [D74F-7U/D74H-7U]: (W) 447.8 x (H) 307.85 x (D) 886mm |

QCT has adopted a rich portfolio of cutting-edge NVIDIA GPUs to accelerate some of the world’s most demanding workloads, including AI, HPC and data analytics, pushing the boundaries of innovation from cloud to edge.

QuantaGrid D54U-3U

- Powered by dual 5th/4th Gen Intel® Xeon® Scalable processors

- Tool-less GPU module design for easy serviceability

- Flexible GPU configurations, targeting AI inferencing, training and HPC workloads

| Specification | Details |
|---|---|
| Processor | (2) 5th/4th Gen Intel® Xeon® Scalable processors, up to 350W TDP |
| Networking | (1) Dedicated 1GbE management port |
| Accelerator | NVIDIA H200 NVL GPU, NVIDIA RTX PRO™ 6000 Blackwell Server Edition GPU, NVIDIA H100 GPU, NVIDIA L40S GPU, NVIDIA L40 GPU, NVIDIA A16 GPU, NVIDIA A2 GPU |
| Storage | (10) hot-swappable 2.5" SATA/SAS/NVMe SSDs |
| Expansion Slot | (1) OCP 3.0 PCIe 5.0 x16 slot; (2) HHHL PCIe 5.0 x16 slots; (1) HHHL PCIe 5.0 x8 slot |
| Dimensions | (W) 438 x (H) 131.6 x (D) 760mm |

QuantaGrid D55Q-2U

- Accelerated by NVIDIA GPUs

- Powered by dual Intel® Xeon® 6 processors

- Adopts DC-MHS (M-FLW) to promote open standards

- Ultimate compute performance and a workload-driven architecture

| Specification | Details |
|---|---|
| Processor | (2) Intel® Xeon® 6 processors, up to 330W/350W |
| Networking | (1) Dedicated 1GbE management port |
| Accelerator | NVIDIA H100 GPU, NVIDIA L40S GPU, NVIDIA L4 GPU |
| Storage | SKU #1: (12) hot-swappable 3.5" SATA/SAS or (12) hot-swappable 2.5" NVMe SSDs; SKU #2: (24) hot-swappable 2.5" NVMe/SATA/SAS SSDs |
| Expansion Slot | Option 1: (4) FHHL PCIe 5.0 x8 slots + (2) HHHL PCIe 5.0 x16 slots + (1) HHHL PCIe 5.0 x8 slot + (2) OCP 3.0 slots; Option 2: (4) FHHL PCIe 5.0 x8 slots + (2) HHHL PCIe 5.0 x16 slots + (1) HHHL PCIe 5.0 x8 slot + (2) OCP 3.0 slots [supports SW GPU]; Option 3: (3) FHFL PCIe 5.0 x16 slots + (2) HHHL PCIe 5.0 x16 slots + (1) HHHL PCIe 5.0 x8 slot + (2) OCP 3.0 slots [supports DW GPU] |
| Dimensions | (W) 440 x (H) 87.5 x (D) 780mm |

QuantaGrid D55X-1U

- Accelerated by NVIDIA GPUs

- Powered by Intel® Xeon® 6 processors

- Adopts DC-MHS (M-FLW) to promote open standards

- Ultimate compute performance and a workload-driven architecture

| Specification | Details |
|---|---|
| Processor | (2) Intel® Xeon® 6 processors, up to 330W/350W |
| Networking | (1) Dedicated 1GbE management port |
| Accelerator | NVIDIA L4 GPU |
| Storage | SKU #1: (12) hot-swappable 2.5" SSDs; SKU #2: (16) hot-swappable E1.S SSDs; SKU #3: (20) hot-swappable E3.S SSDs |
| Expansion Slot | Option 1: (3) HHHL PCIe 5.0 x16 slots + (2) OCP 3.0 slots; Option 2: (2) FHHL PCIe 5.0 x16 slots + (2) OCP 3.0 slots |
| Dimensions | (W) 440 x (H) 43.2 x (D) 780mm |

QuantaGrid S55R-1U

- Powered by a single Intel® Xeon® 6 processor

- A cost-efficient 1U1P density compute architecture

- Adopts DC-MHS to promote open standards

- System optimized for HPC and cloud computing

| Specification | Details |
|---|---|
| Processor | (1) Intel® Xeon® 6 processor, up to 350W TDP |
| Networking | (1) Dedicated 1GbE management port |
| Accelerator | NVIDIA L4 GPU |
| Storage | (12) hot-swappable 2.5" NVMe/SATA/SAS SSDs |
| Expansion Slot | Option 1: (2) HHHL PCIe 5.0 x16 slots + (1) OCP 3.0 slot; Option 2: (2) FHHL PCIe 5.0 x16 slots + (1) OCP 3.0 slot |
| Dimensions | (W) 440 x (H) 43.2 x (D) 780mm |

QuantaGrid S44NL-1U & D44N-1U

- Featuring up to 3x single-width accelerators to support AI inference workloads

- Powered by single (S44NL-1U) or dual (D44N-1U) AMD EPYC™ 9004/9005 Series processors, up to 500W TDP

- Advanced air-cooling architecture supporting top-bin CPUs

- Liquid-cooled design

- Up to 5x PCIe 5.0 expansion slots and the DC-SCM architecture to meet different configuration needs

- System optimized for cloud, enterprise, AI, HPC, networking, security and IoT workloads

| Specification | Details |
|---|---|
| Processor | [S44NL-1U]: (1) AMD EPYC™ 9004/9005 Series processor, up to 500W TDP; [D44N-1U]: (2) AMD EPYC™ 9004/9005 Series processors, up to 500W TDP |
| Networking | (1) Dedicated 1GbE management port |
| Accelerator | NVIDIA L4 GPU |
| Storage | SKU #1: (12) hot-swappable 2.5" NVMe/SATA/SAS SSDs; SKU #2: (16) hot-swappable E1.S SSDs |
| Expansion Slot | Option 1: (3) HHHL PCIe 5.0 x16 slots; Option 2: (2) FHHL PCIe 5.0 x16 slots + (2) OCP 3.0 slots |
| Dimensions | (W) 440 x (H) 43.2 x (D) 780mm |

QuantaPlex S25Z-2U

- 2U2N high density front access multi-node server

- Powered by (1) Intel Xeon 6 CPU per node

- Supports SW or DW PCIe GPUs

- Up to (3) PCIe 5.0 expansion slots per node for diverse device support

| Specification | Details |
|---|---|
| Processor | (1) Intel® Xeon® 6 processor |
| Networking | (1) OCP 3.0 SFF slot |
| Accelerator | NVIDIA L40S GPU, NVIDIA L4 GPU, NVIDIA A2 GPU |
| Storage | (3) hot-swappable 2.5" NVMe SSDs |
| Expansion Slot | Option 1: (1) FHFL SW PCIe 5.0 x16 slot + (1) FHFL SW PCIe 5.0 x8 slot + (1) FHHL SW PCIe 5.0 x16 slot; Option 2: (1) FHFL DW PCIe 5.0 x16 slot + (1) FHHL SW PCIe 5.0 x16 slot |
| Dimensions | (W) 447.8 x (H) 86.3 x (D) 875mm |

QuantaPlex S45Z-2U

- 2U4N high density front access multi-node server

- Powered by (1) Intel Xeon 6 CPU per node

- Supports (1) SW PCIe GPU per node

| Specification | Details |
|---|---|
| Processor | (1) Intel® Xeon® 6 processor |
| Networking | (1) OCP 3.0 SFF slot |
| Accelerator | NVIDIA L4 GPU |
| Storage | (2) hot-swappable E1.S SSDs |
| Expansion Slot | (1) HHHL PCIe 5.0 x16 slot |
| Dimensions | (W) 447.8 x (H) 86.3 x (D) 875mm |

QuantaEdge EGX88D-1U

- Powered by a single Intel® Xeon® 6 processor

- 300mm ultra short-depth design

- Easy access for cable management

- Sufficient space for airflow in rack/cabinet

- Fully integrates vRAN acceleration

- Supports up to (24) SFP28 LAN ports with SyncE in a 1U chassis

- Integrates GNSS

- 1+1 redundant AC/DC PSU

- Front access

| Specification | Details |
|---|---|
| Processor | (1) Intel® Xeon® 6 processor, up to 325W TDP |
| Networking | SKU #1: (16) 25GbE SFP28 (LoM); SKU #2: (24) 25GbE SFP28 (LoM & Intel Carter Flat) |
| Accelerator | NVIDIA L4 GPU |
| Storage | (2) SATA/NVMe M.2 22110/2280 SSDs |
| Expansion Slot | SKU #1: (1) FHHL PCIe 5.0 slot; SKU #2: (1) OCP 3.0 PCIe 5.0 slot for Intel Carter Flat |
| Dimensions | (W) 447.8 x (H) 42.8 x (D) 300.65mm |
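
The networking SKUs translate into aggregate front-panel line rate as shown in this small sketch, which simply multiplies port count by the 25GbE port speed. It ignores oversubscription, protocol overhead, and whatever the host PCIe links can actually sustain; the names used are illustrative.

```python
# Aggregate front-panel line rate of the EGX88D-1U networking SKUs.
SKUS = {"SKU #1": 16, "SKU #2": 24}  # number of 25GbE SFP28 ports
PORT_GBPS = 25


def aggregate_gbps(ports: int, port_gbps: int = PORT_GBPS) -> int:
    """Sum of port line rates in Gb/s (no overhead or oversubscription modeled)."""
    return ports * port_gbps


for sku, ports in SKUS.items():
    print(f"{sku}: {ports} x {PORT_GBPS}GbE = {aggregate_gbps(ports)} Gb/s")
```

SKU #1 reaches 400 Gb/s and SKU #2 600 Gb/s of aggregate line rate.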

QuantaEdge EGX77B-1U

- Powered by a single 5th/4th Gen Intel® Xeon® Scalable processor

- 300mm ultra short-depth design

- NEBS GR63 Level 3 compliant (GR3108 Class 2 optional)

- Operating temperature between -5°C ~ 55°C (-40°C ~ 65°C optional)

- All LOM ports support IEEE 1588 (PTP) and SyncE

- Thermal optimization

- PFR function reserved

| Specification | Details |
|---|---|
| Processor | (1) 5th/4th Gen Intel® Xeon® Scalable processor, up to 250W TDP |
| Networking | SKU #1: (8) 25GbE w/ SyncE, NCSI; SKU #2: (4) 25GbE and (8) 10GbE w/ SyncE, NCSI |
| Accelerator | NVIDIA L4 GPU |
| Storage | (2) SATA/NVMe M.2 2280 SSDs |
| Expansion Slot | (1) FHHL PCIe 5.0 x16 slot |
| Dimensions | (W) 447.8 x (H) 42.8 x (D) 300.65mm (ear to rear wall) |

QuantaEdge EGX74I-1U

- Powered by a single 5th/4th Gen Intel® Xeon® Scalable processor

- 400mm ultra short-depth design

- NEBS GR63 Level 3 compliant (GR3108 Class 2 optional)

- Operating temperature between -5°C ~ 55°C (-40°C ~ 65°C optional)

- SMA connections reserved

- PFR function reserved

| Specification | Details |
|---|---|
| Processor | (1) 5th/4th Gen Intel® Xeon® Scalable processor, up to 250W TDP |
| Networking | (4) 25GbE SFP28 ports (NCSI); (1) 1GbE RJ45 management port |
| Accelerator | NVIDIA L4 GPU |
| Storage | SKU #1: (2) SATA/NVMe M.2 2280 SSDs; SKU #2: (2) SATA/NVMe M.2 2280 SSDs + (2) 2.5" U.2 SSDs |
| Expansion Slot | SKU #1: (2) FH3/4L PCIe 5.0 x16 slots + (1) FHHL PCIe 5.0 x16 slot; SKU #2: (2) FH3/4L PCIe 5.0 x16 slots |
| Dimensions | (W) 447.8 x (H) 42.8 x (D) 400mm |
